When schedule disruptions hit, rerunning a full mixed‑integer optimizer can take longer than the business can wait.

Graph neural networks offer a lightweight alternative: they learn the structural cues that signal which pairwise swaps will unlock the largest objective gains, enabling near‑real‑time repairs that keep service levels and costs on target without a complete re‑solve.

Why executives should care

- Seconds instead of minutes. A GNN‑guided local search can cut recovery time after a disruption by an order of magnitude versus running the full MILP again (arXiv).

- Keeps quality high. Experiments on job‑shop and routing benchmarks show learned swap policies closing 90‑98% of the gap to the optimal solution while using less than 10% of the compute budget (ScienceDirect, arXiv).

- Extends existing solvers. You keep your proven optimizer; the GNN simply recommends high‑value swaps before you call the heavy solver or when timeouts hit.

Business use cases

- Weekly nurse rosters: suggest staff‑to‑shift swaps when sick calls arrive at 04:00.

- Factory sequencing: insert a hot order by re‑sequencing two machines, not 200.

- Last‑mile delivery: reroute one van after a traffic closure without re‑running the fleet model.

- Cloud capacity slots: swap VM allocations to respect power caps during brown‑outs.

Live demo: swapping nurses

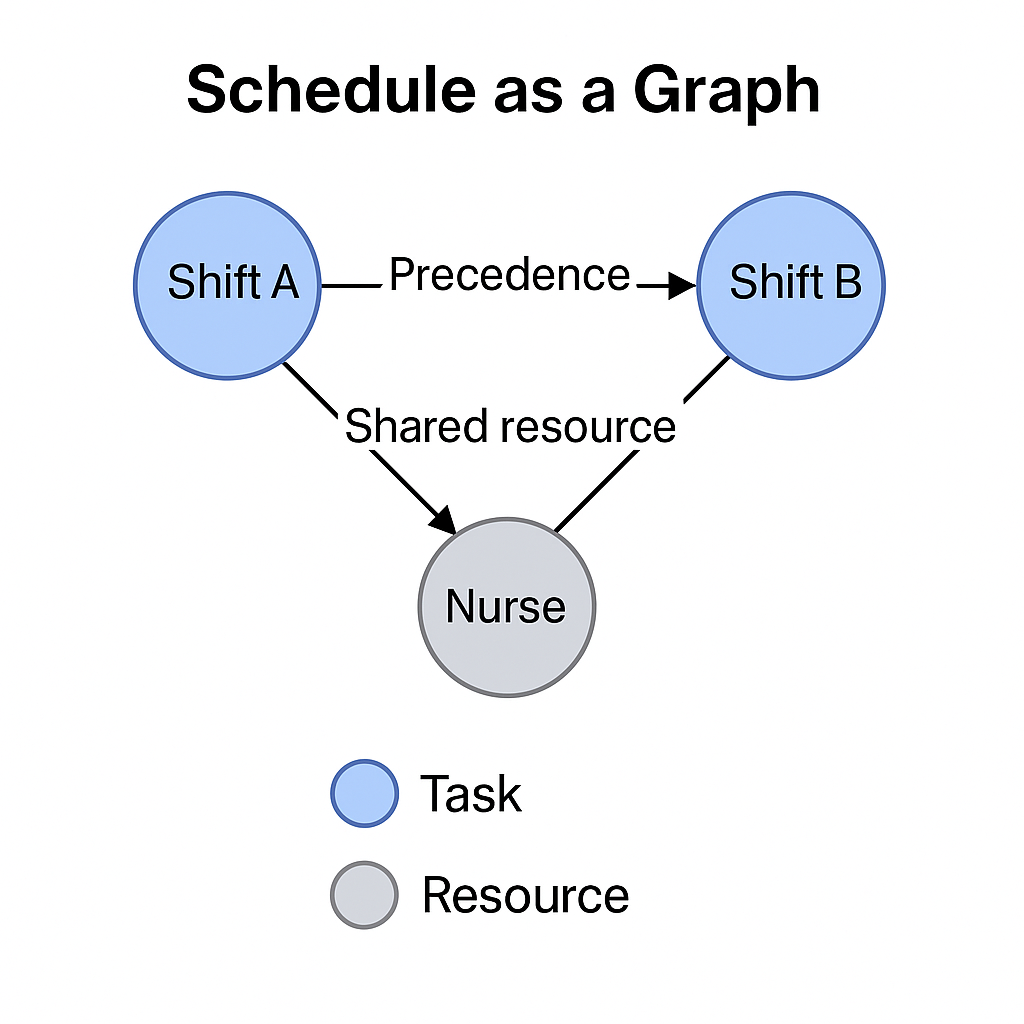

Schedule as a graph

- Nodes = tasks, shifts, or resources.

- Edges = precedence, overlap, or shared resource constraints.

- Features = start time, duration, skill labels, objective gradients.

The GNN message‑passing layers embed local and global structure so the network can evaluate the downstream impact of a candidate swap.

The visual above shows how a schedule turns into a graph: tasks (blue) connect to shared resources (gray), and precedence arrows capture order.
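To make the graph encoding concrete, here is a minimal sketch in plain Python. The toy schedule, the `build_graph` and `message_pass` helpers, and the choice of a simple mean aggregation are all illustrative assumptions; a production GNN would use learned weights and a library such as PyTorch Geometric or DGL, and would usually keep precedence edges directed.

```python
from collections import defaultdict

# Hypothetical toy schedule: three tasks on two machines.
tasks = {
    "t1": {"start": 0.0, "duration": 2.0, "resource": "m1"},
    "t2": {"start": 2.0, "duration": 1.0, "resource": "m1"},
    "t3": {"start": 0.0, "duration": 3.0, "resource": "m2"},
}
precedence = [("t1", "t3")]  # assumed: t1 must precede t3


def build_graph(tasks, precedence):
    """Return node features and an undirected adjacency list."""
    features = {name: [t["start"], t["duration"]] for name, t in tasks.items()}
    adjacency = defaultdict(set)
    for name, t in tasks.items():
        res = t["resource"]
        features.setdefault(res, [0.0, 0.0])  # resource nodes start with zero features
        adjacency[name].add(res)
        adjacency[res].add(name)
    for before, after in precedence:
        # Simplification: precedence is treated as an undirected edge here.
        adjacency[before].add(after)
        adjacency[after].add(before)
    return features, adjacency


def message_pass(features, adjacency):
    """One round: blend each node's features with the mean of its neighbours'."""
    updated = {}
    for node, feat in features.items():
        neigh = [features[n] for n in adjacency[node]]
        mean = [sum(col) / len(neigh) for col in zip(*neigh)] if neigh else feat
        updated[node] = [0.5 * a + 0.5 * b for a, b in zip(feat, mean)]
    return updated


features, adjacency = build_graph(tasks, precedence)
embeddings = message_pass(features, adjacency)
print(embeddings["m1"])  # [0.5, 0.75]: m1 now reflects the tasks it hosts
```

After one round, each resource node carries information about the tasks competing for it; stacking more rounds spreads that information further across the schedule.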

Training the model

1. Generate supervision

   - Run the exact solver on historical instances; log the best‑improving swaps along the branch‑and‑bound path.

   - Or frame it as reinforcement learning over a Markov decision process where actions are swap operators (NeuroLS pattern) (arXiv).

2. Label each candidate with the observed objective delta.

3. Loss: rank‑based (pairwise margin) or regression on improvement.

4. Curriculum: start with small instances, progressively scale to production size.
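The rank‑based option in step 3 can be illustrated with a pairwise margin (hinge) loss. This is a sketch under stated assumptions: the function name, the default margin of 1.0, and the convention that higher scores and higher deltas both mean "better swap" are choices made for the example, not details from a specific paper.

```python
def pairwise_margin_loss(scores, deltas, margin=1.0):
    """Average hinge loss over candidate pairs with different labels.

    scores: model outputs, one per candidate swap (higher = predicted better).
    deltas: observed objective improvement per swap (higher = actually better).
    """
    total, pairs = 0.0, 0
    for i in range(len(scores)):
        for j in range(len(scores)):
            if deltas[i] > deltas[j]:  # swap i should outrank swap j
                total += max(0.0, margin - (scores[i] - scores[j]))
                pairs += 1
    return total / pairs if pairs else 0.0


# Correct ordering with enough separation: no penalty.
well_ranked = pairwise_margin_loss([2.0, 0.0, 1.0], [5.0, 1.0, 3.0])
# The better swap is scored lower: penalised.
mis_ranked = pairwise_margin_loss([0.0, 2.0], [5.0, 1.0])
print(well_ranked, mis_ranked)  # 0.0 3.0
```

A ranking loss only asks the model to order candidates correctly, which is all the inference loop needs; regression on the improvement is the alternative when the predicted magnitude itself matters, for example to decide whether any swap is worth applying.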

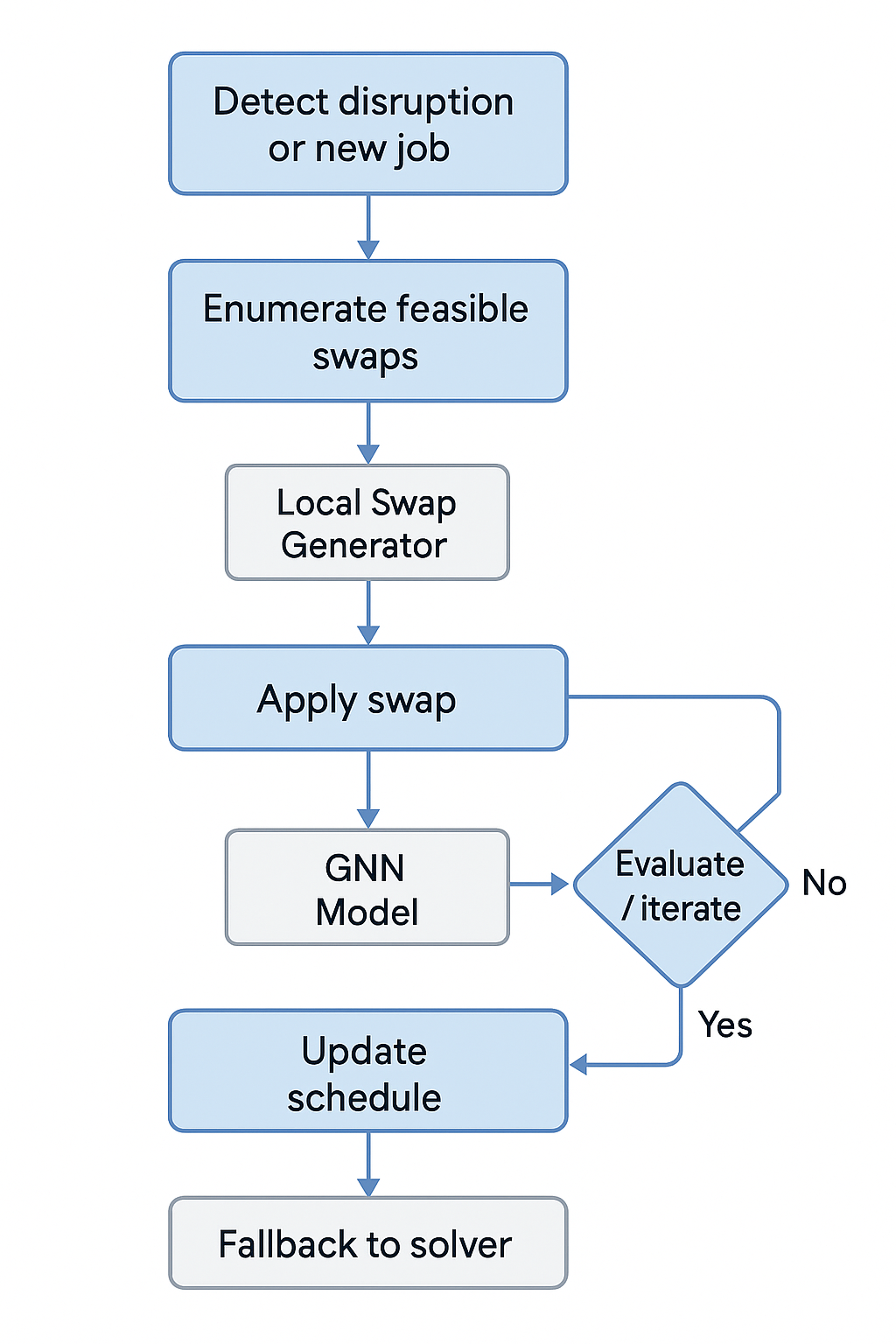

Inference workflow in production

- Detect disruption or new job.

- Enumerate a small neighborhood of feasible swaps (classic local‑search operators).

- Feed the set to the trained GNN; receive scores in <50 ms.

- Apply the top‑ranked swap; optionally repeat for K iterations, or hand back to the solver if the quality target is unmet.

This hybrid loop achieves near‑optimal repairs in a bounded time budget and keeps the full model solve as an exception path, not the default.
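The loop above can be sketched in a few lines. Everything named here is hypothetical: `repair`, its parameters, and the single‑machine example are illustrations, and `oracle_score` stands in for the trained GNN by computing the true objective change, which a real deployment would replace with a model call.

```python
from itertools import combinations


def repair(schedule, objective, score_swap, is_feasible, max_iters=3, target=None):
    """Greedy swap repair; lower objective is better (assumed)."""
    current = list(schedule)
    for _ in range(max_iters):
        if target is not None and objective(current) <= target:
            break  # quality target already met
        candidates = []
        for i, j in combinations(range(len(current)), 2):
            trial = current[:]
            trial[i], trial[j] = trial[j], trial[i]
            if is_feasible(trial):
                candidates.append((score_swap(current, i, j), trial))
        if not candidates:
            break
        best_score, best = max(candidates, key=lambda c: c[0])
        if best_score <= 0:
            break  # scorer predicts no improving swap
        current = best
    needs_full_solve = target is not None and objective(current) > target
    return current, needs_full_solve


def total_completion_time(order):
    """Sum of job completion times on one machine."""
    elapsed, total = 0, 0
    for duration in order:
        elapsed += duration
        total += elapsed
    return total


def oracle_score(order, i, j):
    """Stand-in for the GNN: the true improvement from swapping i and j."""
    swapped = order[:]
    swapped[i], swapped[j] = swapped[j], swapped[i]
    return total_completion_time(order) - total_completion_time(swapped)


repaired, needs_full_solve = repair(
    [3, 1, 2], total_completion_time, oracle_score,
    is_feasible=lambda s: True, target=10,
)
print(repaired, needs_full_solve)  # [1, 2, 3] False
```

The `needs_full_solve` flag is the exception path: when it comes back true, the caller would hand the instance to the full optimizer rather than keep swapping.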

Governance and integration

- Constraint safety net: pair with a constraint registry so recommended swaps are auto‑screened for rule violations.

- Drift monitoring: log prediction error vs. realized improvement; retrain when the gap grows.

- Explainability: attach attention weights to graph edges to show which resource conflicts drove the suggestion, helping planners accept the AI’s advice.
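As one possible shape for the drift‑monitoring bullet, the sketch below keeps a rolling window of absolute prediction errors and flags retraining when the mean exceeds a threshold. The class name, window size, and threshold are assumptions for illustration; real values would come from your own error distribution.

```python
from collections import deque


class DriftMonitor:
    """Rolling mean absolute error between predicted and realized improvement."""

    def __init__(self, window=100, threshold=0.5):
        self.errors = deque(maxlen=window)  # oldest errors fall out automatically
        self.threshold = threshold

    def record(self, predicted, realized):
        self.errors.append(abs(predicted - realized))

    def mean_error(self):
        return sum(self.errors) / len(self.errors) if self.errors else 0.0

    def should_retrain(self):
        # Only judge once the window is full, to avoid reacting to a few samples.
        window_full = len(self.errors) == self.errors.maxlen
        return window_full and self.mean_error() > self.threshold


monitor = DriftMonitor(window=3, threshold=0.5)
for predicted, realized in [(1.0, 1.0), (1.0, 0.9), (1.0, 1.1)]:
    monitor.record(predicted, realized)
stable = monitor.should_retrain()   # small errors: no retrain

for predicted, realized in [(2.0, 0.0), (2.0, 0.0), (2.0, 0.0)]:
    monitor.record(predicted, realized)
drifted = monitor.should_retrain()  # window now holds only large errors
print(stable, drifted)  # False True
```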

Key technical challenges

- Swap enumeration explosion: use heuristics or learned proposals to keep the candidate set at O(N log N) size.

- Cold‑start for new policies: pre‑train on synthetic data, then fine‑tune online.

- Multi‑objective trade‑offs: extend the label to a weighted vector (cost, fairness, carbon) and let the GNN learn Pareto‑dominance ordering.
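For the multi‑objective case, it helps to be precise about what Pareto dominance means. The sketch below assumes every objective is minimized and uses made‑up (cost, unfairness, carbon) vectors for three hypothetical swaps; the helper names are illustrative.

```python
def dominates(a, b):
    """True if a is no worse than b everywhere and strictly better somewhere."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def pareto_front(candidates):
    """Names of candidates that no other candidate dominates."""
    return [
        name for name, vec in candidates.items()
        if not any(dominates(other, vec) for other in candidates.values())
    ]


def weighted_label(vec, weights):
    """Collapse an objective vector into one scalar training label."""
    return sum(w * v for w, v in zip(weights, vec))


# Hypothetical swaps scored as (cost, unfairness, carbon); lower is better.
swaps = {
    "A": (10.0, 0.2, 5.0),
    "B": (12.0, 0.1, 5.0),
    "C": (12.0, 0.3, 6.0),
}
front = pareto_front(swaps)
print(front)  # ['A', 'B']: C is dominated by A
```

A weighted sum such as `weighted_label` picks a single point on that front, so the weights encode a business decision; training on dominance ordering instead leaves the final trade‑off to the planner.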

Graph neural networks let you learn a fast, smart “spell‑checker” for schedules: it spots the one or two swaps that move the objective most, leaving heavyweight re‑optimization for the few cases that truly need it.

Companies that embed this layer reduce disruption response times, lower cloud costs, and keep human planners focused on strategic exceptions.